RespAI Lab

Welcome to the RespAI Lab!

KIIT Bhubaneswar, India

Welcome to our research lab, led by Dr. Murari Mandal. At RespAI Lab, we focus on advancing large language models (LLMs) by addressing challenges related to long-content processing, inference efficiency, interpretability, and alignment. Our research also explores synthetic persona creation, regulatory issues, and innovative methods for model merging, knowledge verification, and unlearning.

Motto of RespAI Lab: Driving technical breakthroughs in AI through cutting-edge research and innovation, with a focus on solving complex challenges in LLMs and other generative models, and contributing to top-tier conferences (ICML, ICLR, NeurIPS, AAAI, KDD, CVPR, ICCV, etc.).

Note for prospective students interested in joining the RespAI research group. Responsibilities and duties of both existing and aspiring members.

"When you go to hunt, hunt for rhino. If you fail, people will say anyway it was very difficult. If you succeed, you get all the glory"

Ongoing Research at RespAI Lab

- Advanced Reasoning in LLMs: Exploring techniques to enhance complex reasoning abilities in LLMs, focusing on multi-step problem solving, logical inference, and contextual understanding to improve decision-making and generate more reliable outcomes.

- Interpretability and Alignment of Generative AI Models: Exploring the interpretability of generative AI models, aligning their outputs with human values, and addressing the issue of hallucinations in model responses.

-

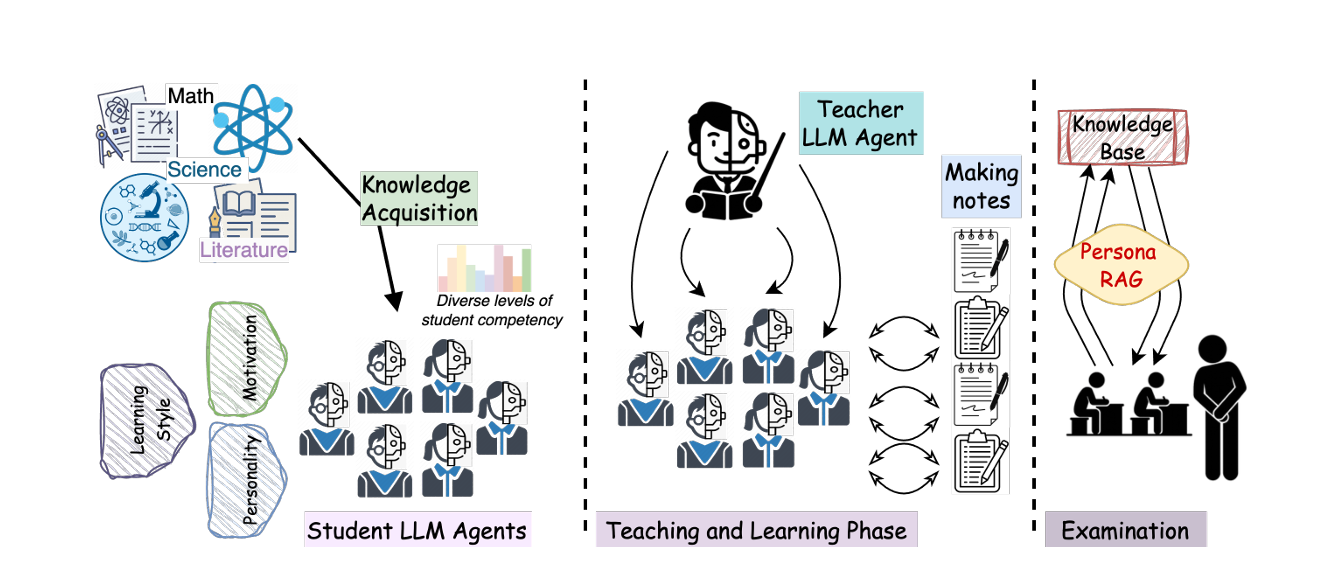

Synthetic Persona and Society Creation: Creating and studying synthetic personalities, communities, and societies within LLMs, and analyzing the behaviors and dynamics of these synthetic constructs.

-

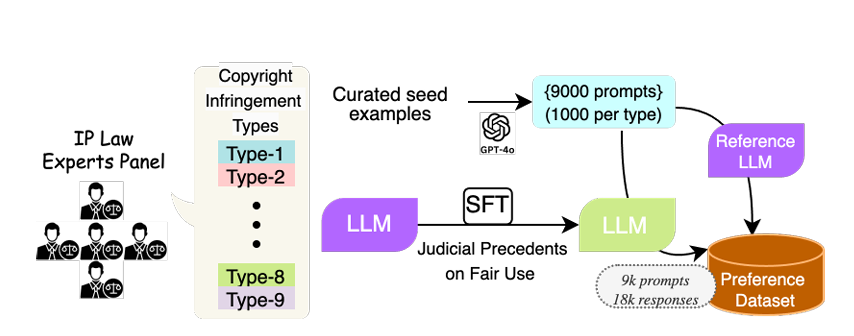

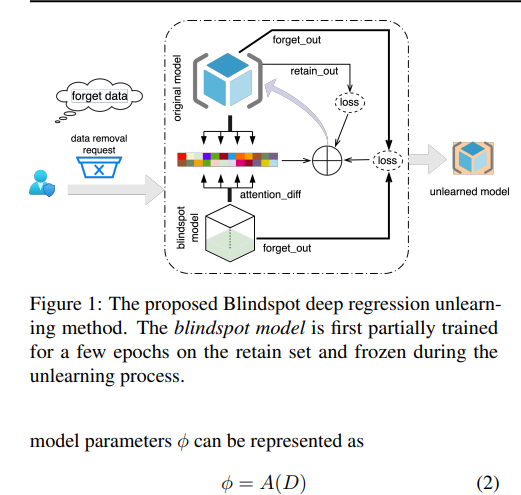

Regulatory Challenges in LLMs: Investigating regulatory concerns surrounding LLMs, including the implementation of unlearning techniques to comply with data privacy regulations and enhance model fairness.

- Model Merging and Knowledge Verification: Developing methods for merging multiple models, editing model behavior, and verifying the accuracy and consistency of the knowledge they generate.

Recent News

| Date | News |
|---|---|
| Aug 21, 2025 | Paper accepted to the EMNLP 2025 Main Track, Suzhou, China [Acceptance Rate - 22.16%]. Congratulations Debdeep 🎉🎉 |
| Aug 21, 2025 | Paper accepted to EMNLP 2025 Findings, Suzhou, China [Acceptance Rate - 17.35%]. Congratulations Aakash 🎉🎉 |
| Jul 17, 2025 | “Guardians of Generation: Dynamic Inference-Time Copyright Shielding with Adaptive Guidance for AI Image Generation” has been accepted to the Unlearning and Model Editing Workshop at ICCV 2025! 🎉 |
| Jul 08, 2025 | “Agents Are All You Need for LLM Unlearning” has been accepted to COLM 2025! 🎉 |
| May 31, 2025 | Preprint of “OrgAccess: A Benchmark for Role Based Access Control in Organization Scale LLMs” is available on arXiv. |
| May 25, 2025 | Preprint of “Investigating Pedagogical Teacher and Student LLM Agents: Genetic Adaptation Meets Retrieval Augmented Generation Across Learning Style” is available on arXiv. |
| May 25, 2025 | Preprint of “Nine Ways to Break Copyright Law and Why Our LLM Won’t: A Fair Use Aligned Generation Framework” is available on arXiv. |
| May 05, 2025 | Check out our talk with Professor Raj Dabre on advancements in machine translation and LLMs for Indian languages. The full discussion is available on YouTube: https://youtu.be/gZOuzDG5B-w?si=ql3zfjt79ren2PAZ |
| Apr 25, 2025 | Dr. Murari Mandal joins the BrainXAI team to collaborate on cutting-edge AI in healthcare research! |
| Apr 10, 2025 | Preprint of “Right Prediction, Wrong Reasoning: Uncovering LLM Misalignment in RA Disease Diagnosis” is available on arXiv. |
| May 23, 2024 | 🎉 Paper accepted to KDD 2024! 🎉 |

Selected Publications

- Investigating Pedagogical Teacher and Student LLM Agents: Genetic Adaptation Meets Retrieval Augmented Generation Across Learning Style. In The 2025 Conference on Empirical Methods in Natural Language Processing, 2025.

- Deep Regression Unlearning. In Proceedings of the 40th International Conference on Machine Learning, 23–29 Jul 2023.

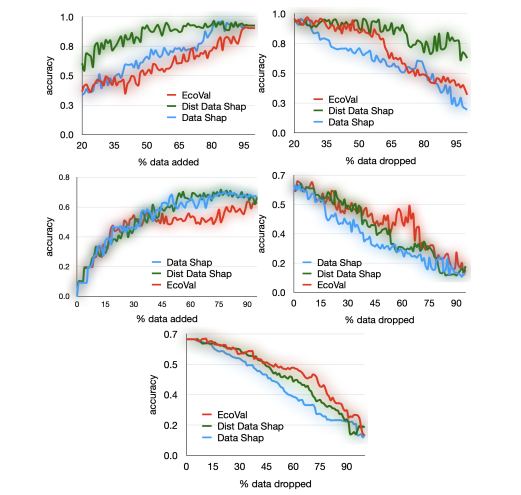

EcoVal: An Efficient Data Valuation Framework for Machine Learning23–29 jul 2024

EcoVal: An Efficient Data Valuation Framework for Machine Learning23–29 jul 2024 -

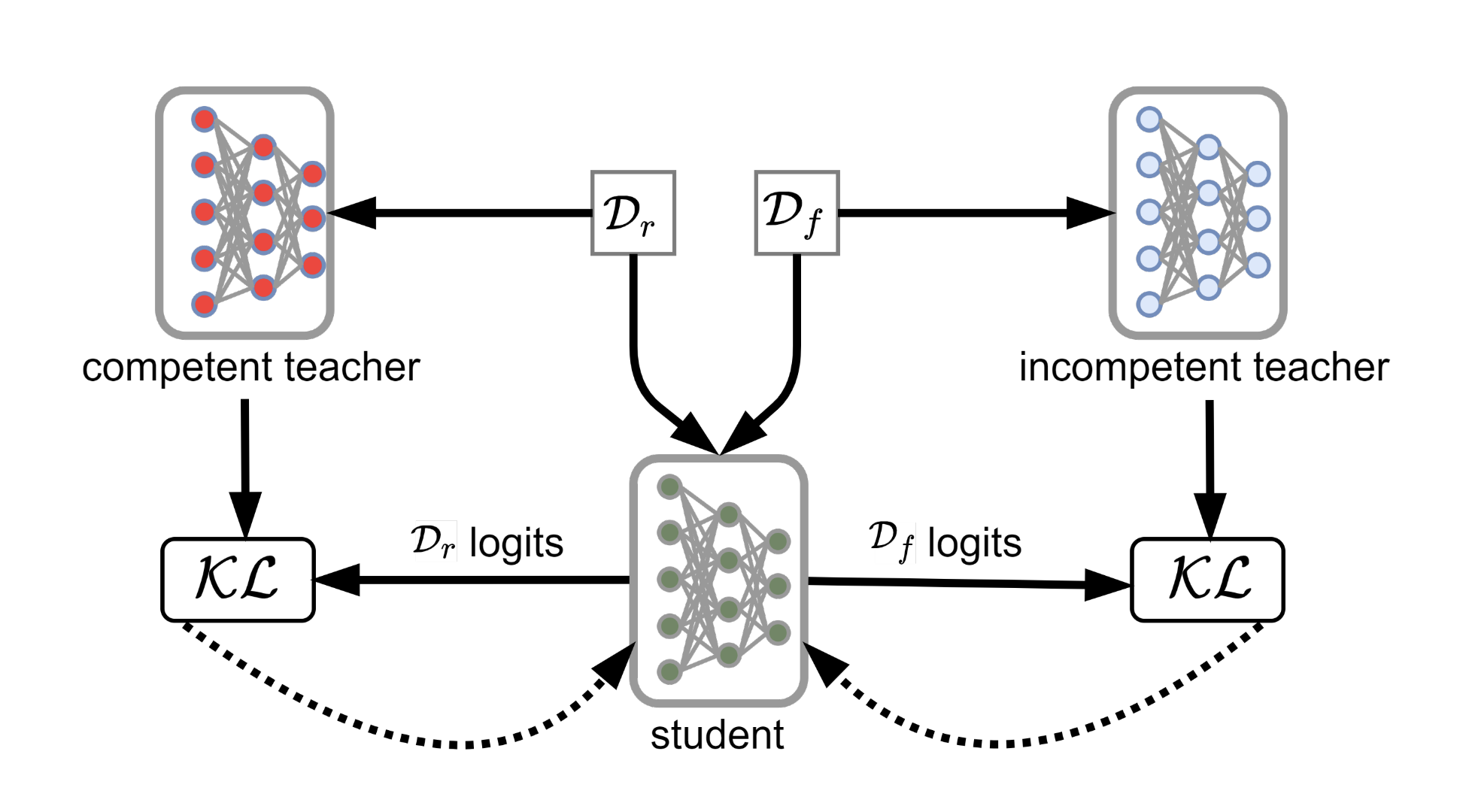

Can Bad Teaching Induce Forgetting? Unlearning in Deep Networks Using an Incompetent TeacherProceedings of the AAAI Conference on Artificial Intelligence, Jun 2023

Can Bad Teaching Induce Forgetting? Unlearning in Deep Networks Using an Incompetent TeacherProceedings of the AAAI Conference on Artificial Intelligence, Jun 2023